This is another one of those not-too-exciting posts, but one that sums up a lot of stuff I've done over the weekend.

Filter

I started off with work on the filter. As mentioned in the previous post, it now tracks the keyboard. I also added a filter envelope, but envelope amount is still missing.

I then did some quick calculations on the consequences of using +/-12V instead of +/-15V.

First of all, the filter cutoff mixer has a 270k resistor to V-. It raises the initial cutoff frequency by 5.55 oct with -15V, and 4.44 oct with -12V. Similarly, we have a trimmer between +/-V which lets us trim the same range. We do lose some range when reducing from 15V to 12V, but I have not yet tried trimming the filter so I'm unsure if it's an issue.

Second, the reference current in the cutoff exponential converter uses a 1M5 resistor to -15V to generate a 0.01mA current. This will now be 0.008mA, and it linearly offsets the cutoff frequency. This can be trimmed away using the cutoff and tracking trimmer, but again I have not tried to do this.
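A quick sketch of the arithmetic behind the first two points. The reference current is plain Ohm's law; for the cutoff offset I'm assuming it scales linearly with the rail voltage, which matches the 5.55 and 4.44 oct figures above. The function names are just for illustration.

```python
# Effect of dropping the rails from +/-15V to +/-12V (illustrative sketch).
R_REF = 1.5e6  # 1M5 reference resistor, in ohms


def reference_current(v_supply, r=R_REF):
    """Current through the reference resistor (Ohm's law), in amperes."""
    return abs(v_supply) / r


def scaled_offset(offset_at_15v, v_supply):
    """Scale an offset that is assumed proportional to the rail voltage."""
    return offset_at_15v * abs(v_supply) / 15.0


i15 = reference_current(-15.0)
i12 = reference_current(-12.0)
print(f"Reference current: {i15 * 1e3:.3f} mA at -15V, {i12 * 1e3:.3f} mA at -12V")
print(f"Cutoff offset at -12V: {scaled_offset(5.55, -12.0):.2f} oct")
```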

Third, the dead bands for resonance and VCA, i.e. the minimum voltage needed to start changing the params, will change slightly - from 15mV to 12mV for resonance, and 10mV to 8mV for the VCA.

All in all, the filter will probably work well though it needs a bit more testing.

DCO

I decided to get the second DCO going yesterday. I had to find and set up my Windows computer, but other than that, programming it went without issues. I decided not to try to figure out more about why it outputs 5V instead of 10V at the moment, as I was simply too tired to look at the code. (UPDATE: It is because the 3.3V ref combined with using a 22k load on the DAC actually turns it into a 0-2.5V range instead of 0-5V, so the charging current is half of what it should be.)

DCO calibration

I did however look into how to fix the calibration circuit. The DCO uses its Vcc as a reference when trying to calibrate wave amplitude, and also for detecting "overflows" when pitch changes.

The PIC16F18346 has an internal 5-bit DAC (32 steps) which in my case uses Vcc as reference. This has been set to 26/32 * Vcc for calibration and 27/32 * Vcc for overflow detection.

When Vcc changes from 5V to 3.3V, the calibration threshold goes from 4.0625V to 2.68125V.
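The thresholds are just the DAC code divided by the number of steps, times Vcc. A small sketch to check the numbers for both supply voltages (the function name is made up for the example):

```python
# Comparator thresholds from the internal DAC, using Vcc as reference.
DAC_STEPS = 32          # thresholds are set as n/32 * Vcc
CALIBRATION_CODE = 26
OVERFLOW_CODE = 27


def dac_threshold(code, vcc, steps=DAC_STEPS):
    """Voltage produced by the DAC for a given code and supply voltage."""
    return code / steps * vcc


for vcc in (5.0, 3.3):
    cal = dac_threshold(CALIBRATION_CODE, vcc)
    ovf = dac_threshold(OVERFLOW_CODE, vcc)
    print(f"Vcc = {vcc}V: calibration {cal:.5f}V, overflow {ovf:.5f}V")
```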

The onboard calibration circuit is simply a resistor divider with a 100k and 68k resistor. It divides a 10V input down to 4.048V, so by using the 4.0625V threshold we should be able to get a 0-10V output from the DCO.

When using Vcc = 3.3V instead, we need the divider to bring 10V down to approx 2.68125V. As both ends of the 68k resistor are exposed, we can put another resistor in parallel with it. Using a combination of a 75k and a 3.3k resistor (in series, so 78.3k), we end up with 2.668V from a 10V input, which is close to the same "error" as the 5V version.
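Here is a sketch of the divider calculation. I'm assuming the 100k is the top resistor and the 68k the bottom one, which is the arrangement that gives 4.048V from 10V.

```python
# Calibration divider, before and after adding a resistor across the 68k.
R_TOP = 100e3       # assumed to be the resistor on the input side
R_BOTTOM = 68e3     # assumed to be the resistor to ground
R_EXTRA = 75e3 + 3.3e3  # 75k and 3.3k in series = 78.3k


def parallel(a, b):
    """Equivalent resistance of two resistors in parallel."""
    return a * b / (a + b)


def divider_out(v_in, r_top, r_bottom):
    """Output voltage of an unloaded resistor divider."""
    return v_in * r_bottom / (r_top + r_bottom)


v_5v_version = divider_out(10.0, R_TOP, R_BOTTOM)
v_3v3_version = divider_out(10.0, R_TOP, parallel(R_BOTTOM, R_EXTRA))
print(f"Original divider: {v_5v_version:.3f}V from 10V")
print(f"With 78.3k in parallel: {v_3v3_version:.3f}V from 10V")
```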

As for overflow detection, 27/32 * 3.3V = 2.78V, which means the output will reach 10.4V before overflowing. That's about 4% higher than the correct value. I'm not sure if this is audible; we just have to test it.
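To see where the 10.4V comes from, the overflow threshold can be referred back to the DCO output by dividing by the divider ratio. This sketch assumes the modified divider (100k on top, 68k in parallel with 78.3k on the bottom).

```python
# DCO output level at which the overflow comparator trips (Vcc = 3.3V).
VCC = 3.3
R_TOP = 100e3
R_BOTTOM = (68e3 * 78.3e3) / (68e3 + 78.3e3)  # 68k in parallel with 78.3k

divider_ratio = R_BOTTOM / (R_TOP + R_BOTTOM)
overflow_threshold = 27 / 32 * VCC               # at the comparator input
overflow_output = overflow_threshold / divider_ratio  # at the DCO output
overshoot_percent = (overflow_output / 10.0 - 1.0) * 100.0

print(f"Overflow trips at {overflow_output:.2f}V out "
      f"({overshoot_percent:.1f}% above 10V)")
```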

DCO DAC ref voltage

This cannot be higher than 3.3V when running with Vcc = 3.3V. I have yet to calculate what this means for maximum charge current etc.

DCO 2 on voice card controller

Now, this opened up a giant can of worms unfortunately. Once DCO 2 was in place, the top of the saw wave from both oscillators got cut off at around 4V. As the only thing connecting the two DCOs is the sync lines, I immediately suspected that something was going on there.

I completed the code for turning on and off sync, but that didn't help, so I decided to move DCO2 from its socket to a breadboard and run breadboard wires to the socket, to better be able to connect/disconnect the sync lines.

Unfortunately, while doing this, I swapped the DGND and +5V pins on DCO2. Fearing I broke something, I disconnected DCO2 and started testing the rest of the circuit.

Now I noticed a sort of metallic sound on the attack portion of each note. This led me on a wild chase to pin down the problem. I swapped the DCOs; fortunately DCO2 still worked. I figured out that the sound originated AFTER the filter and then realised it came from the envelope VCA CV. I tried replacing the CV DAC and sample & hold boards as well, with no result.

I then turned to the code and did a git diff. I quickly realised that I unintentionally updated DCO2 twice, once to the real CV and then to 0 to disable changes while debugging sync. This should really not have anything to do with the CV generation, but after a lot more debugging, it turns out that it somehow messes with the timings. It looks like every second update of the CV failed, and from the look of it one or more of the bits sent to the DAC was missing, or at least that is my theory. Removing the extra update fixed the metallic noise.

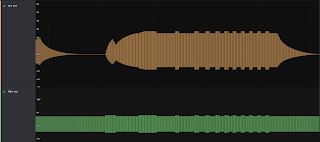

Envelope CV (bottom). The signal drops to an almost fixed level for every second update. The level follows the curve of the envelope, which leads me to believe that it's a case of missing bits in the DAC value.

I am quite certain that this issue will come back and bite me later!

DCO sync

Now, after all this, I finally went back to debug sync. I did realise that what was probably going on was something I've seen before - trying to input a voltage higher than Vcc to a pin on the MCU makes weird stuff happen. That was why I wanted to move DCO2 to the breadboard in the first place. I realised I had connected DCO out to the sync pin of the other DCO, meaning that it would see up to 10V! After carefully connecting DCO2 again, I could confirm that this was in fact the issue - though not exactly how I expected it to be.

What happens is that the OUTPUT of the DCO gets cut off at around 4V when connected to the sync pin. When I instead connected sync to the timer output of the other DCO, sync started working perfectly. I remember now that this was how I intended it to be done between two DCOs. I only opened up the possibility to also use an analog wave as input, but forgot that it has to be limited to 0-3.3V.

Image: Hard sync. The waves are in phase in reality, I've only tapped them at different points in the circuit.

Missing +/-12V on the DCO debug headers

This is just a tiny thing, but it threw me off for a bit. I have forgotten (?) to connect the analog power pins of the DCO to the debug pins next to the socket, so I didn't get any power to the DCO on the breadboard. This is fixed in v1.2.1 of the controller board.

Unexpected refresh rate and S&H caps

I can't remember what rate I was aiming for, but right now the S&H runs at 196kHz. That means around 12kHz per channel. I only wonder because I have a commented out line above it with twice that rate.

When replacing the S&H board, I realised that the one I have in the circuit uses 2.2nF caps. Not sure if I used the same board for testing in my earlier post, but it explains why I've seen a few examples where we couldn't charge the cap fast enough. However, it also tells me that 2.2nF is probably fine :)

Envelope nonlinearity and missing updates

This surprised me. When debugging the metallic noise, I realised that the envelope CV is not perfect, it has small drops along the way where it reverts to the previous sample instead of the next.

Turns out this is because the calculation of next step takes too much time, though not EVERY time for some reason, so the double buffered output array has not been updated and the old value is sent instead.

Image: The output drops to the previous value for one cycle, then goes up again.

I added a counter that is incremented when this happens, and it looks like it actually happens A LOT. Matrix recalculations simply take too long, even with the very small number of params I'm using. This is extremely disappointing and something I need to look into.
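To make the failure mode concrete, here is a toy model of it. This is not the actual firmware: the output period of 83us (roughly 1/12kHz), the compute times and the function name are all made up for illustration. The idea is simply that if the next value isn't ready when the output fires, the old buffer content is sent again and a counter is incremented.

```python
# Toy model of a double-buffered output where the calculation is sometimes late.
def simulate_output(samples, compute_times_us, period_us):
    """Return (values actually sent, number of missed updates)."""
    sent = []
    missed = 0
    last_ready = samples[0]  # value currently sitting in the output buffer
    for value, compute_time in zip(samples, compute_times_us):
        if compute_time <= period_us:
            last_ready = value  # new value made it into the buffer in time
        else:
            missed += 1         # too slow: the old value is sent once more
        sent.append(last_ready)
    return sent, missed


envelope = [0, 10, 20, 30, 40, 50]        # rising attack segment
compute_times = [60, 60, 95, 60, 95, 60]  # hypothetical per-step timings in us
sent, missed = simulate_output(envelope, compute_times, period_us=83)
print(sent, missed)
```

In this model a late step shows up as a repeated value in the output, which is the same kind of small drop I see on the scope.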

Here is a way of getting an average without constantly summing several numbers. I can use this to get average cycle time. If it overflows I can use two levels, first averaging 1000 and then averaging those again.
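A minimal sketch of one common way to do this, the incremental mean, where each new sample nudges the stored average instead of being added to a running sum. I can't say for sure that it is identical to the method referred to above, and the class names are my own. The second class shows the two-level idea: average a block of samples, then average the block means.

```python
# Incremental mean: no running sum, only the current mean and a sample count.
class RunningAverage:
    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def add(self, x):
        """Fold one sample into the mean and return the updated mean."""
        self.count += 1
        self.mean += (x - self.mean) / self.count
        return self.mean


class TwoLevelAverage:
    """Averages fixed-size blocks, then averages the block means."""

    def __init__(self, block_size=1000):
        self.block_size = block_size
        self.inner = RunningAverage()
        self.outer = RunningAverage()

    def add(self, x):
        self.inner.add(x)
        if self.inner.count == self.block_size:
            self.outer.add(self.inner.mean)  # one finished block
            self.inner = RunningAverage()    # start the next block

    @property
    def mean(self):
        """Mean of all completed blocks (a partial block is ignored)."""
        return self.outer.mean


avg = RunningAverage()
for cycle_time_us in (1, 2, 3, 4, 5):
    avg.add(cycle_time_us)
print(avg.mean)

two_level = TwoLevelAverage(block_size=2)
for cycle_time_us in (1, 3, 5, 7):
    two_level.add(cycle_time_us)
print(two_level.mean)
```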

Update: It seems one update cycle takes about 80us. As it is run 12000 times per second, the updating alone takes 960000us, or 0.96s per second. This does of course include the time it takes to update the output, as that is interrupt based and happens during the calculations, but we need to look at what is actually taking so long. 80us should give us at least 600 * 80 = 48000 instructions to "play" with.
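The budget can be written out as a few lines of arithmetic. The 600 instructions per microsecond is the figure implied by the "600 * 80" above; treat it as an assumption about the CPU rather than a measured number.

```python
# CPU budget for the update loop.
CYCLE_TIME_US = 80             # measured time for one update cycle
UPDATES_PER_SECOND = 12_000    # per-channel refresh rate
INSTRUCTIONS_PER_US = 600      # assumed, from the "600 * 80" estimate

busy_us_per_second = CYCLE_TIME_US * UPDATES_PER_SECOND
cpu_load = busy_us_per_second / 1_000_000
instructions_per_cycle = INSTRUCTIONS_PER_US * CYCLE_TIME_US

print(f"Busy time: {busy_us_per_second}us per second ({cpu_load:.0%} load)")
print(f"Instructions available per update: {instructions_per_cycle}")
```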

Testing using Little Phatty

I also had time to do a bit of testing and measuring with the Little Phatty.

Pitch bend

First of all, using the pitch bend on the LP made the stepping sounds go away, just as expected. The MPK25 simply does not have high enough resolution.

Clicks when filter keyboard tracking is off

I also confirmed that the LP makes some similar clicking sounds as the XM8 when filter keyboard tracking is turned off. Not entirely unexpected.

Pitch range

The LP pitch range is approximately 26Hz to 31000Hz when only using the keyboard - Osc set to 16' and lowest key + mod wheel all the way down = 26Hz, 2' Osc, highest key and mod wheel up = 31kHz (I can't hear the last 5 semitones, and the last ones are pure sines on the scope).

The MPK25 sends midi note 0 to 120. Using midi from the MPK25 I was able to get down to approx 8.3Hz. As for the top, I can't get past oct#4 G# for some reason, and the pitch bend started working in the opposite direction??

I had some issues where bend up did not work for note 120, for 119 it works a bit so not entirely sure what is going on. I can't see from my notes if this was in fact for the LP or for XM8 though!

Electric Druid VCDO range

Juno range

The Juno apparently covers the entire midi spec range, by having a clock divider on the master clock for the two lowest octaves. It stays within 6 cents of the perfect pitch for all notes except midi note 127.

More on this at Electric Druid.
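For reference when comparing ranges, here is a small sketch that converts midi note numbers to frequencies and measures a deviation in cents. It assumes standard 12-tone equal temperament with A4 (note 69) at 440Hz; the function names are my own.

```python
import math

A4_NOTE = 69    # midi note number of A4
A4_HZ = 440.0   # assumed tuning reference


def midi_to_hz(note):
    """Equal-tempered frequency of a midi note number."""
    return A4_HZ * 2 ** ((note - A4_NOTE) / 12)


def cents(freq, ref):
    """Deviation of freq from ref in cents (100 cents = 1 semitone)."""
    return 1200 * math.log2(freq / ref)


for note in (0, 120, 127):
    print(f"note {note}: {midi_to_hz(note):.2f}Hz")
```

With this, a measured frequency can be checked against the ideal pitch, e.g. `cents(measured_hz, midi_to_hz(note))`.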